Week 3

Findings from User Testing:

Linna: Page styling needs more work, the color scheme has poor contrast, and the UI is messy and hard to handle

It isn’t obvious what the buttons do.

Alanna: Picks up the UI very fast, but she moves quickly and presses every button she can to see what it does. A unique test, since she doesn’t use music software

Josh: Picked up the looping function very quickly; otherwise the UI is clunky and it isn’t obvious to the user what things do

What I learned: encourage the user to try out every function, make it more obvious what the UI icons are, and lead the user toward things to press without having to explain it to them

Week 3

my mockup this week https://www.figma.com/design/GR39lkSI20EedStbZwchca/e-mockup?node-id=0-1&t=LWCDceSNzPqQLNQ2-1

Try mockups in webflow next time

- talk to tanika about sound

- think about how to navigate through a song’s timeline with words: time markers for intro, verse, hook. These can be comments from users or skip points like in Serato, but labeled. Can be labeled by the artist or by users

animation of picking up and dropping little vinyl on dj track with mouse.

think about how to intuitively modify sound by squeezing it to make it faster with touch gestures instead of a waveform view

https://www.instagram.com/yehwan.yen.song/?hl=en

Search bar filtering inspiration: https://canopycanopycanopy.com/search

https://www.pinterest.com/search/pins/?rs=ac&len=2&q=sound%20representation&eq=sound%20repre&etslf=8631

https://mrdoob.com/lab/javascript/waveform/

feature ideas:

AI or algo to figure out where the most commonly played parts of the song are; the uploading artist can also define their own sections when they upload

Algo recommended songs that would fit well (tempo, style, key wise) to mix into the next track

Labeling the song waveform by lyrics, searchable section by lyrics, song search by lyric

Search music by keywords (ex: kpop-ish, genres, “something like mac demarco”, pop trends, “2010’s britney spears pop electronic”)

Intuitive gamified keyboard and mouse controls

Touch gestures for mobile

MIDI support

Allow users to access their own downloaded files or open “USBs” on each deck. Offline mode where the tracks they use aren’t uploaded to Eterna and aren’t able to be livestreamed for copyright reasons

live streaming platform

hire a dj for your wedding remotely???

dj livestream - DJ together online collaboratively

viewers can make requests to your set (requires donation to request a song)

Interview (What would you like to see? How would you use this platform?):

Daedelus - Alfred Darlington - Producer / Electronic Live Performer

Doctor Jeep - Andre Lira - Producer / DJ

Otis Zheng - Pop Musician

LastFM-esque recommendation algo based on user tags - Lily’s suggestion / Project

Creative Commons-esque licenses that you can choose between as an uploader - Jasmine’s suggestion

Otis Zheng

sample loop batch exporting, export loops from each track, or make an editable arrangement track(s) available for the user to place and layer their loops

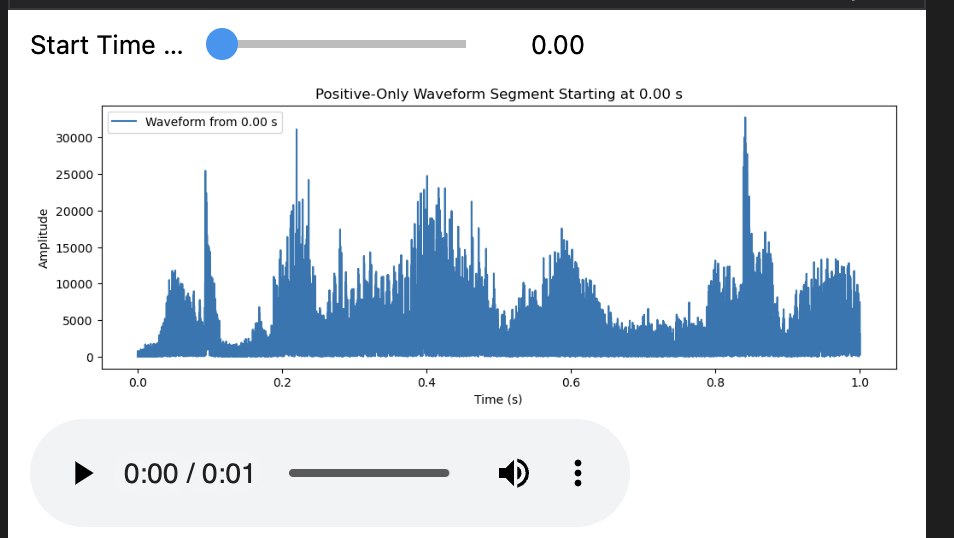

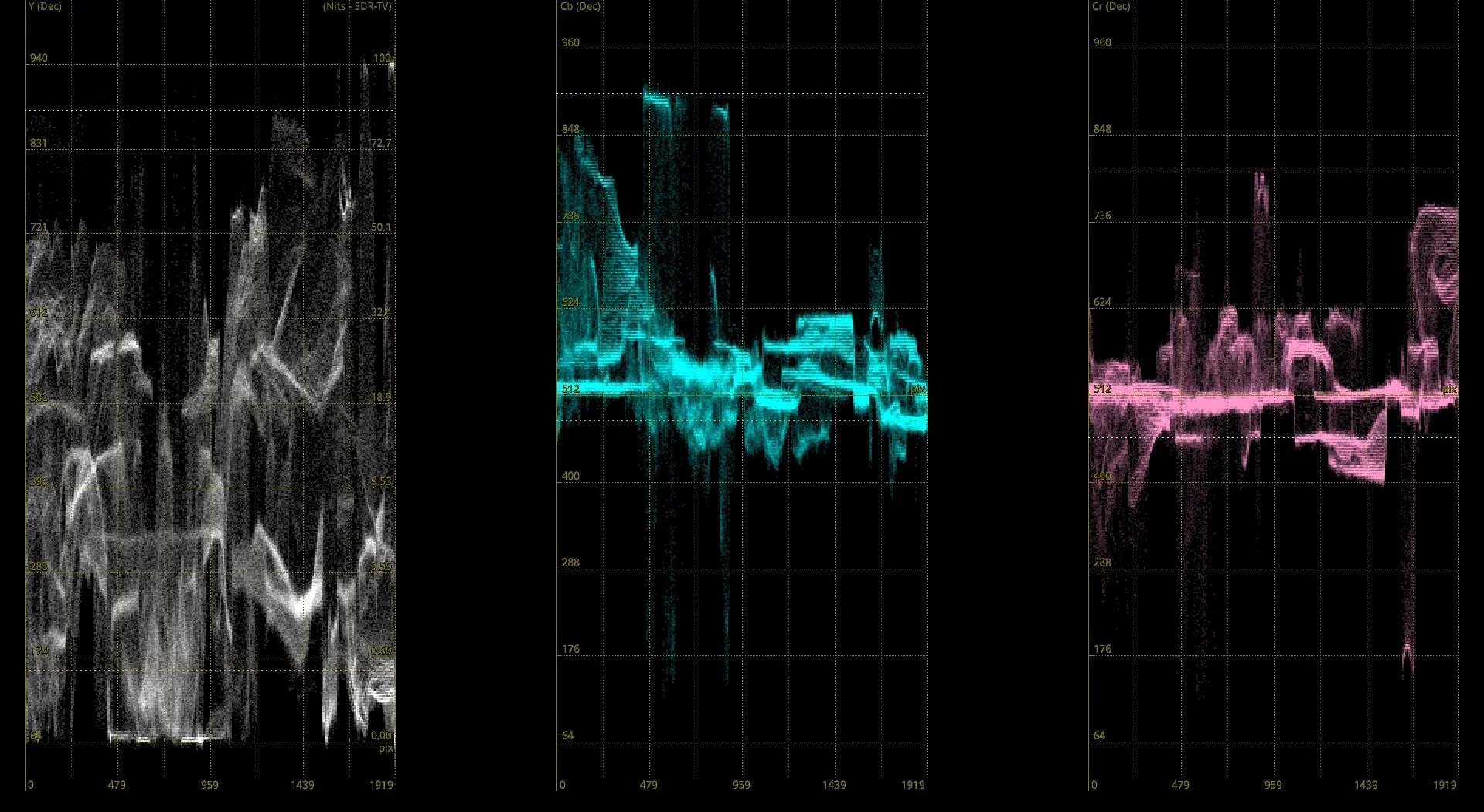

Volume over time representation:

Instead of showing volume over time with the positive and negative phases visible:

1. Combine stereo left and right into a sum for a mono waveform

2. Take the highest peaks of the positive and negative phases

3. Invert the highest negative peaks to positive phase and overwrite the positive phase

4. View the loudest parts of the waveform in a linear mountainous view that is more intuitive to the user
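
A minimal sketch of steps 1 to 3 above, to sanity-check the idea before building it with the Web Audio API. The function name `peak_envelope` and the `bins` parameter (one value per display column) are made up for illustration. It also averages the two channels instead of doing a raw sum, which is an assumption so the mono signal stays in the same range as the inputs.

```python
from typing import List, Sequence


def peak_envelope(left: Sequence[float], right: Sequence[float], bins: int) -> List[float]:
    """Return one rectified peak value per display column (hypothetical helper)."""
    if len(left) != len(right):
        raise ValueError("left and right channels must have the same length")
    if bins <= 0:
        raise ValueError("bins must be positive")

    # Step 1: combine stereo into mono (averaged here, an assumption, to avoid clipping).
    mono = [(l + r) / 2.0 for l, r in zip(left, right)]

    n = len(mono)
    envelope: List[float] = []
    for b in range(bins):
        window = mono[b * n // bins:(b + 1) * n // bins]
        if not window:
            envelope.append(0.0)
            continue
        # Step 2: highest positive peak and deepest negative peak in this window.
        pos_peak = max(max(window), 0.0)
        neg_peak = min(min(window), 0.0)
        # Step 3: flip the negative peak to positive and keep whichever is louder.
        envelope.append(max(pos_peak, -neg_peak))
    return envelope


samples = [0.0, 0.5, -1.0, 0.25]
print(peak_envelope(samples, samples, bins=2))
```

Step 4 is then just drawing each value as the height of one column, so the view only ever goes upward from the baseline.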

Users don’t need to see phase representation so the full waveform is not necessary

Simplify the interface for utility

PHASE IS NECESSARY TO VIEW AT SOME POINTS. LET THE USER CHOOSE TO VIEW COMBINED PHASE OR TRADITIONAL DUAL PHASE VIEW

Use playlisting as data labeling for similarity checks and recommendations to each user, similar to Pinterest recommendations based on which songs are in playlists together.

Ask users to label songs themselves such as “chill”, “rock”, “hyperpop”, “Boom Bap”

Create recommendation playlists based on user label names
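
A rough sketch of the "songs that sit in playlists together" idea, assuming playlists are just sets of track IDs. The names `co_occurrence` and `similar_tracks` are hypothetical, and counting raw co-occurrences is only the simplest possible similarity measure, not a claim about how Pinterest or anyone else does it.

```python
from collections import Counter
from itertools import combinations
from typing import Iterable, List, Set, Tuple


def co_occurrence(playlists: Iterable[Set[str]]) -> Counter:
    """Count how often each pair of tracks appears in the same playlist."""
    counts: Counter = Counter()
    for playlist in playlists:
        # Sort so each pair is always stored in the same order.
        for a, b in combinations(sorted(playlist), 2):
            counts[(a, b)] += 1
    return counts


def similar_tracks(track: str, counts: Counter, top_n: int = 3) -> List[Tuple[str, int]]:
    """Return the tracks most often playlisted together with `track`."""
    scores: Counter = Counter()
    for (a, b), n in counts.items():
        if a == track:
            scores[b] += n
        elif b == track:
            scores[a] += n
    # Highest count first; ties broken alphabetically so output is stable.
    return sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:top_n]


playlists = [{"a", "b", "c"}, {"a", "b"}, {"b", "d"}]
counts = co_occurrence(playlists)
print(similar_tracks("a", counts))
```

The same counting would work on user labels instead of playlists by treating every label ("chill", "hyperpop") as a set of tracks.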

LastFM-esque data tracking: artist, song title, album, and play duration are logged.

1. Scrobbling

Each time a user plays a song on a supported platform (e.g., Spotify, iTunes), the track details (artist, song title, album, play duration) are logged.

2. User Profile Creation

The scrobbled data is aggregated to create a detailed user profile. This includes:

Most listened-to artists, albums, and songs.

Listening trends over time (e.g., genre preferences, seasonal trends).

Behavioral data like skipping songs or repeating tracks.

3. Collaborative Filtering

This approach looks for patterns in user behavior:

If User A and User B listen to a lot of the same artists, Last.fm assumes they have similar tastes.

Recommendations are generated by suggesting artists, albums, or tracks that User B listens to but User A hasn’t discovered yet.

Collaborative filtering relies on the assumption that similar users enjoy similar music.
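
To make the User A / User B example concrete, here is a small user-based sketch. It uses Jaccard overlap of artist sets as the similarity measure, which is my own assumption for illustration and not something Last.fm is confirmed to use; `jaccard` and `recommend` are hypothetical names.

```python
from typing import Dict, List, Set


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap between two sets of artists, from 0.0 (none) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(target: str, listens: Dict[str, Set[str]], top_n: int = 5) -> List[str]:
    """Suggest artists that similar users listen to but `target` has not heard."""
    mine = listens[target]
    scores: Dict[str, float] = {}
    for user, artists in listens.items():
        if user == target:
            continue
        sim = jaccard(mine, artists)
        if sim == 0.0:
            continue  # no shared taste, so ignore this user
        for artist in artists - mine:
            # Each unheard artist is weighted by how similar its listener is.
            scores[artist] = scores.get(artist, 0.0) + sim
    return sorted(scores, key=lambda a: (-scores[a], a))[:top_n]


listens = {
    "A": {"x", "y"},
    "B": {"x", "y", "z"},
    "C": {"q"},
}
print(recommend("A", listens))
```

Here User C shares nothing with User A, so only User B's extra artist gets suggested.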

4. Content-Based Filtering

Last.fm also uses metadata about songs, artists, and genres to make recommendations:

Tags: Last.fm has a tag-based system where users label songs with tags like "rock," "chill," "indie," etc.

Features: The algorithm considers attributes such as tempo, mood, and genre.

This method is independent of user data and focuses on the intrinsic properties of the music.

5. Hybrid Recommendation System

To improve accuracy, Last.fm combines collaborative and content-based approaches:

Collaborative filtering can provide diverse suggestions based on user behavior.

Content-based filtering ensures recommendations remain relevant even when user behavior data is sparse.
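
One way the two scores could be blended, as a sketch only: lean on the content-based score when a user has little history, and shift toward the collaborative score as history grows. The function `hybrid_score`, the weighting formula, and the constant `k` are all assumptions for illustration, not Last.fm's actual method.

```python
def hybrid_score(collab: float, content: float, n_interactions: int, k: int = 20) -> float:
    """Blend two scores; `k` (hypothetical) is the history size where both count equally."""
    if n_interactions < 0 or k <= 0:
        raise ValueError("n_interactions must be >= 0 and k must be > 0")
    # Weight on the collaborative score grows from 0 toward 1 with more behavior data.
    w = n_interactions / (n_interactions + k)
    return w * collab + (1 - w) * content


# Brand-new user: only the content-based score counts.
print(hybrid_score(collab=0.2, content=0.8, n_interactions=0))
# User with exactly k logged plays: both scores count equally.
print(hybrid_score(collab=1.0, content=0.0, n_interactions=20))
```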

6. Community-Driven Enhancements

Last.fm leverages its community to refine recommendations:

Crowdsourced tags and playlists enhance metadata accuracy.

User reviews and ratings inform the algorithm about popular and niche tracks.

7. Machine Learning Models

The core recommendation engine is often powered by machine learning:

Clustering: Grouping users or songs into clusters based on similarity.

Classification: Predicting a user’s preference for a song based on historical data.

Neural networks: Advanced systems may analyze complex patterns in listening habits.

8. Feedback Loop

The system learns over time by tracking how users interact with recommendations:

Positive signals: If a user listens to a recommended song entirely or adds it to a playlist.

Negative signals: If a user skips or dislikes a track.
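
A tiny sketch of how those signals could nudge a stored preference score (per tag, artist, or track). The event names, the 1.0 / 0.0 signal values, and the learning `rate` are all hypothetical; the notes above only say which events count as positive or negative.

```python
# Hypothetical mapping from playback events to a target preference value.
SIGNALS = {
    "finished": 1.0,           # listened to the whole recommended song
    "added_to_playlist": 1.0,
    "skipped": 0.0,
    "disliked": 0.0,
}


def update_preference(current: float, event: str, rate: float = 0.1) -> float:
    """Move the stored preference a fraction of the way toward the event's signal."""
    target = SIGNALS[event]
    return current + rate * (target - current)


score = 0.5
score = update_preference(score, "finished", rate=0.5)
print(score)
score = update_preference(score, "skipped", rate=0.5)
print(score)
```

A small `rate` means one accidental skip barely matters, while repeated skips steadily pull the score down.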

9. Network Effects

Since Last.fm has a large user base, it benefits from the network effect:

More users mean more scrobbled data, which enhances the algorithm’s training and accuracy.

Follow other users who are good playlisters. Good playlisters will act as tastemakers for other users to follow.

Follow other users’ recommendation algorithms.

Switch between your recommendation algo, no algo, user customized algo, or an algorithm of (or made by) another user

VST plugins on the web: https://synthanatomy.com/2018/10/web-audio-modules-play-plugins-google-chrome.html

WAM Standard: https://www.youtube.com/watch?v=w7a_Kbx7nA8

Javascript Plugins: https://www.nickwritesablog.com/audio-plugins-with-javascript/

https://www.youtube.com/watch?v=K8Knf9b4ark

https://www.webaudiomodules.com/

https://github.com/boourns/wam-community

VSTS ARE TOO COMPLICATED USE RNBO FX https://rnbo.cycling74.com/explore/rnbo-pedals

https://www.reddit.com/r/webdev/comments/hovpoo/audiomass_edit_audio_files_trim_eq_fade_reverb_a/

https://github.com/pkalogiros/audiomass

This one uses Web Audio API

Web Audio API

LET PEOPLE DOWNLOAD TRACKS AND USE USERS AS PEER TO PEER SERVERS TO SAVE ON SERVER COSTS

Response to VIO's Week 2 update

When I first met Vio, I had a hard time understanding why she left her job in big tech to come to ITP. As someone coming from four years at an art school, job stability has become a more and more pressing issue for me.

Vio’s writing about Marx’s text is something I’ve been thinking about a lot recently as well. Work in our modern capitalist society is not rewarded as it once was, because of rising prices, stagnating incomes, and too few employee protection laws.

I really like what was written in the interviews about being used as a tool by the company they’re working for. At their core, companies do see their employees as tools and don’t care about their employees’ free will if it doesn’t fit the company’s profit goals. Being at the top level of a company is the only way to exercise free will in your work, but there is still a lot of pressure to please investors.

I can really see now how ITP is a big step forward for Vio to truly explore her work personally and artistically. I think it was hard for me to see that, coming from the opposite side of things. But if I end up in big tech at some point, I think I will have similar feelings after a while. It’s really good that I’m getting this perspective now so I can navigate that better in my own life.

Week 2:

Potential Thesis Interviews:

Law Professors:

https://steinhardt.nyu.edu/people/charles-sanders

https://steinhardt.nyu.edu/people/george-stein

DJ Professors:

https://steinhardt.nyu.edu/people/delia-martinez

UI/UX Professors:

Conceptual Ideation Professors:

Tom Igoe

Dan O’Sullivan

ETERNA is:

an open-share music streaming platform for artists and listeners

open-share = open for usage, but with a requirement to share royalties

a DJ Tool

a Sampling tool

a Social app for music fans

DJ livestreaming platform

What I should focus on for thesis / portfolio:

DJ Tool / Sampling Tool

User Experience / User Profiles

Social aspects

Music streaming codec / livestreaming